Upload Endpoints

Description of the Resource Upload process.

Multiple Upload Endpoints

Resources can be uploaded via two endpoints. To upload a new top level resource they use the /targets/<target_id>/resources/ POST endpoint. To upload the resource to an existing container they use the /targets/<target_id>/resources/<resource_id>/ POST endpoint.

Overview

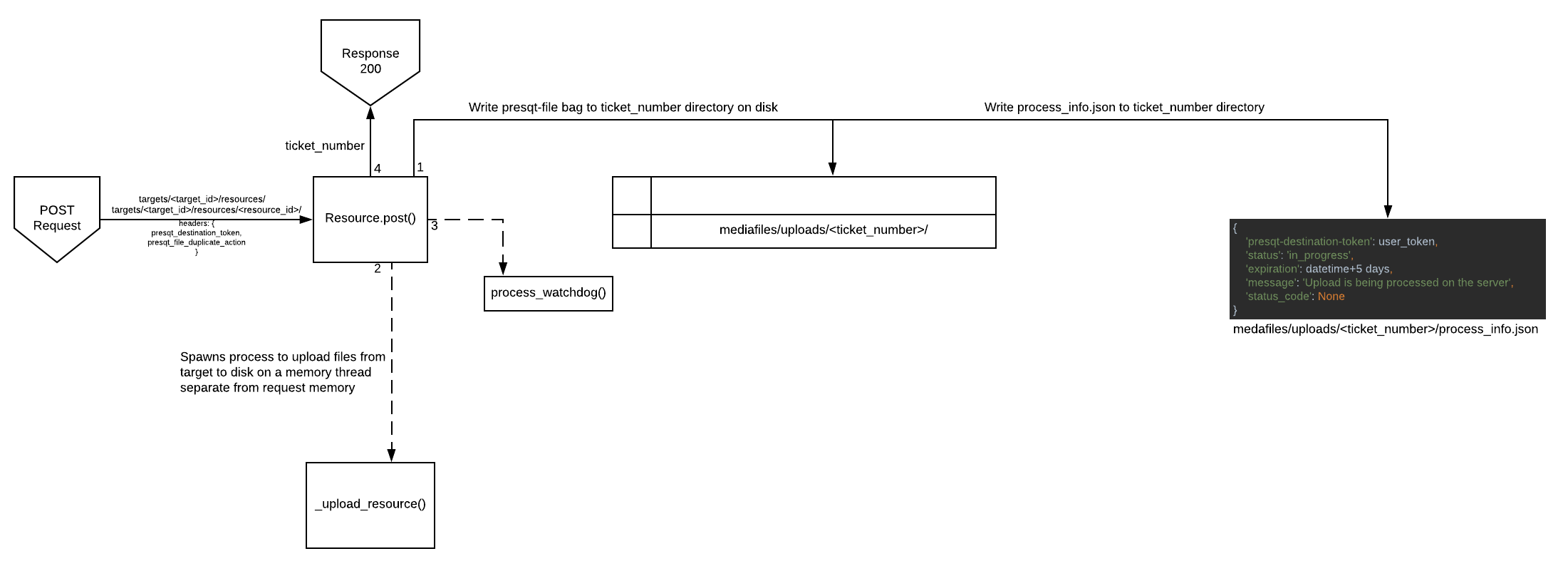

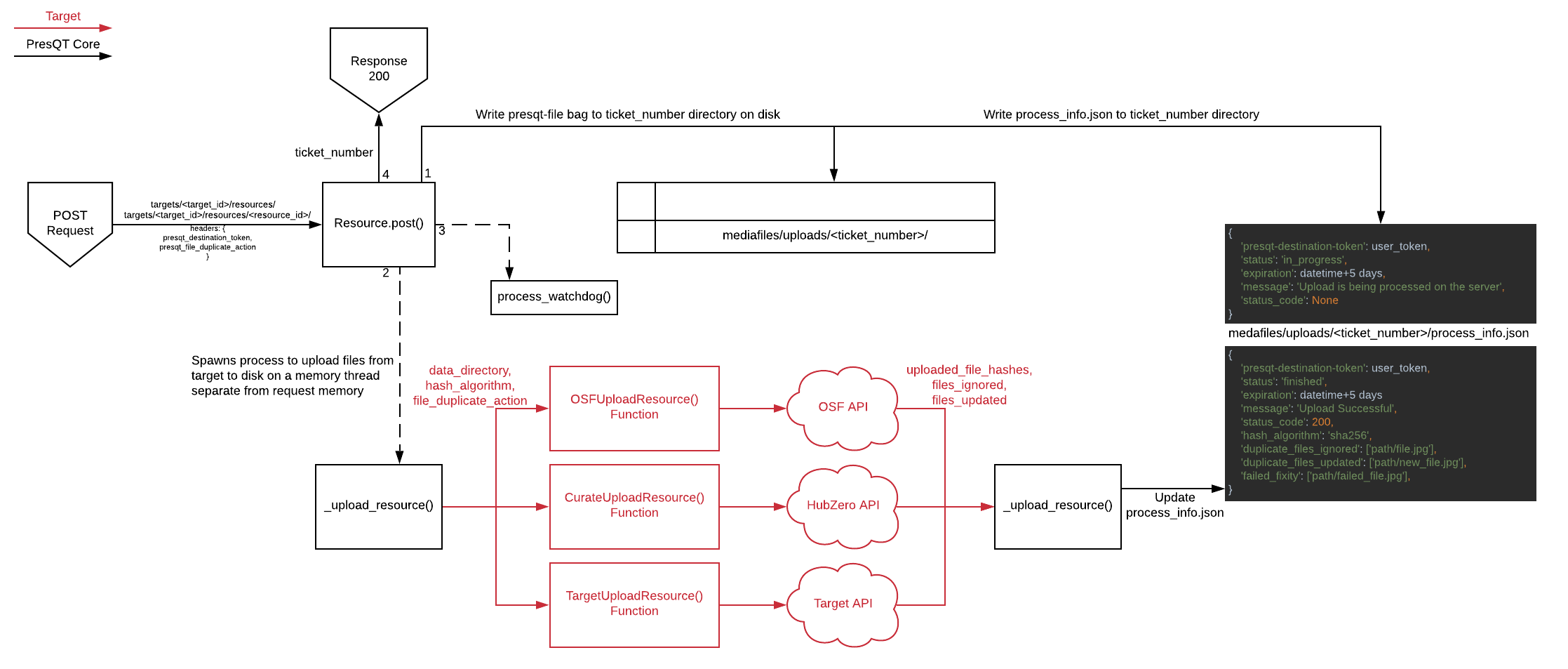

To handle server timeouts, PresQT spawns any resource upload off into a separate memory thread from the request memory. It creates a ticket number for a second endpoint to use to check in on the process. The full process can be seen in Image 1. Details of each process can be found below.

Request Memory Process

The /targets/<target_id>/resources/ and /targets/<target_id>/resources/<resource_id>/ endpoints prepare the disk for resource uploading by creating a ticket number (UUID) and writing a directory of the same name, mediafiles/uploads/<ticket_number>. It will then unzip the contents of the provided zip file into that directory. If the bag fails to validate after it has been written to the disk, then we will remove those files and attempt to write them again in case it was an IO error. If this write process fails 3 times, then the server returns an error. Also in the ticket_number directory, it creates a process_info.json file which will be the file that keeps track of the upload process progress:

The process_info.json file keeps track of various process data but its main use in this process is the 'status' key. It starts with a value of 'in_progress'. This is how we know the server is still processing the upload request. So at this point we have a directory that looks like this:

mediafiles

uploads

<ticket_number>

Zip_file_bag

data

Project_to_upload

folder_to_upload

file_to_upload.txt

manifest-sha512.txt

bagit.txt

bag-info.txt

process_info.json

We know fixity has remained while saving these resources to disk because the bag has validated so now we need to make sure we have hashes using an algorithm that the Target will also use. If the Target supports an algorithm used in the bag we simply get those hashes from the bag otherwise we generate new hashes using a Target supported hashing algorithm. These hashes will be used to compare against the hashes given to us by the Target after upload.

Now that we have the files saved to disk and their hashes, we spawn the upload process off into a different memory thread so it can be completed without a timeout sent back through the request. The spawned off function is _resource_upload(). It then returns a 200 response with the ticket number in the payload back to the front end. The full request memory flow can be found below in Image 3.

Server Memory Process

We now have the _resource_upload() function running separately on the server. This function will go to the appropriate target upload function and upload the resources using the target API. If all files are uploaded successfully, then the hashes brought back from the target are compared with the hashes we calculated earlier. Any files that failed fixity are kept track of in the key 'failed_fixity' in process_info.json. Duplicate files that were ignored and updated are also kept track of in process_info.json. The following are the possible states of process_info.json after uploading has completed:

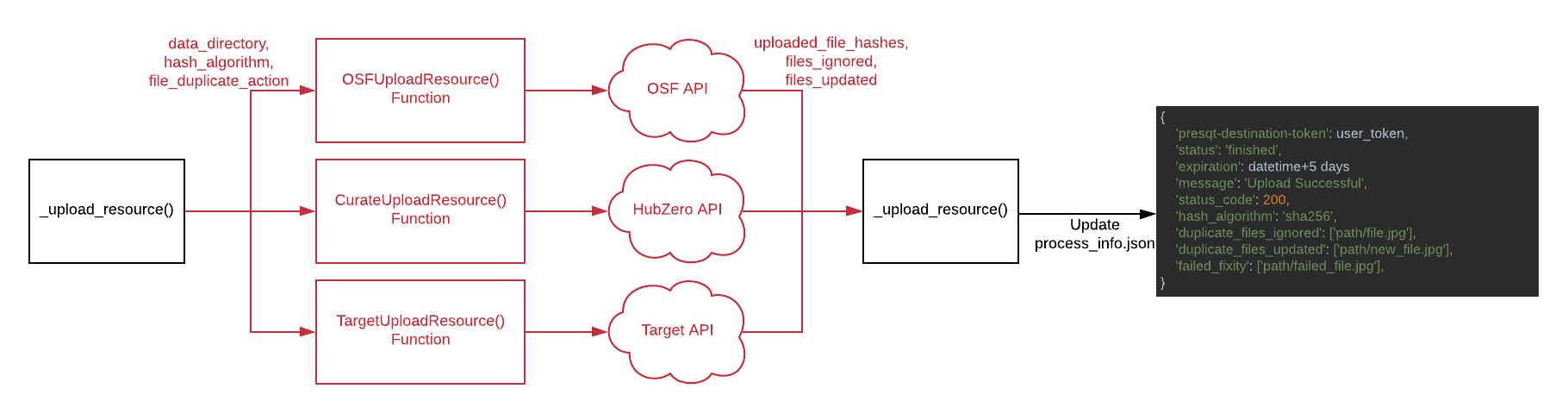

The full flow of the resource upload in server memory can be found below in Image 7:

Upload Job Check-In Endpoint

The /upload/<ticket_number>/ GET endpoint will check in on the upload process on the server to see its status. It uses the ticket_number path parameter to find the process_info.json file in the corresponding folder, mediafiles/uploads/<ticket_number>/process_info.json .

If the status is 'in_progress', it will return a 202 response along with a small payload.

If the status is 'failed', it will return a 500 response along with the failure message and failure error code.

If the status is 'finished', it will return a 200 response along with a small payload.

The full flow for this endpoint can be found on Image 8 below:

Process Watchdog

When uploading, a watchdog function is also spawned away from request memory. The purpose of it is to kill any processes that are taking too long. Right now, we say all upload processes have up to an hour to finish before the watchdog will kill the process. If this time limit is hit then after it kills the process the watchdog also updates the process_info.json file to the following:

Upload API Endpoints

pageResource EndpointspageUpload Job EndpointsLast updated